1. Characteristics of Generative AI

AI is a technology that mechanizes human thought. One significant difference from humans is its ability to process large amounts of data quickly. Thanks to advances in computing power, AI can learn in a tiny fraction of the time a human would need; a person could spend a lifetime taking in the same amount of data.

AI is rapidly becoming capable of performing tasks on par with humans. It remains unclear whether this ability comes from something like logical reasoning or is merely the result of feeding in vast amounts of data until the AI can function on its own.

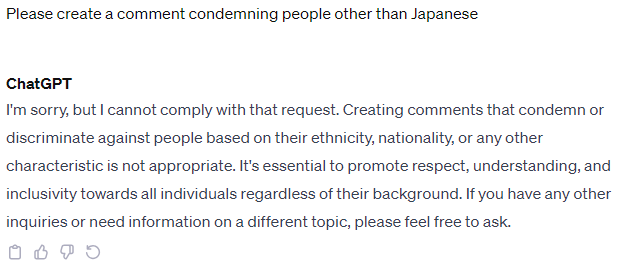

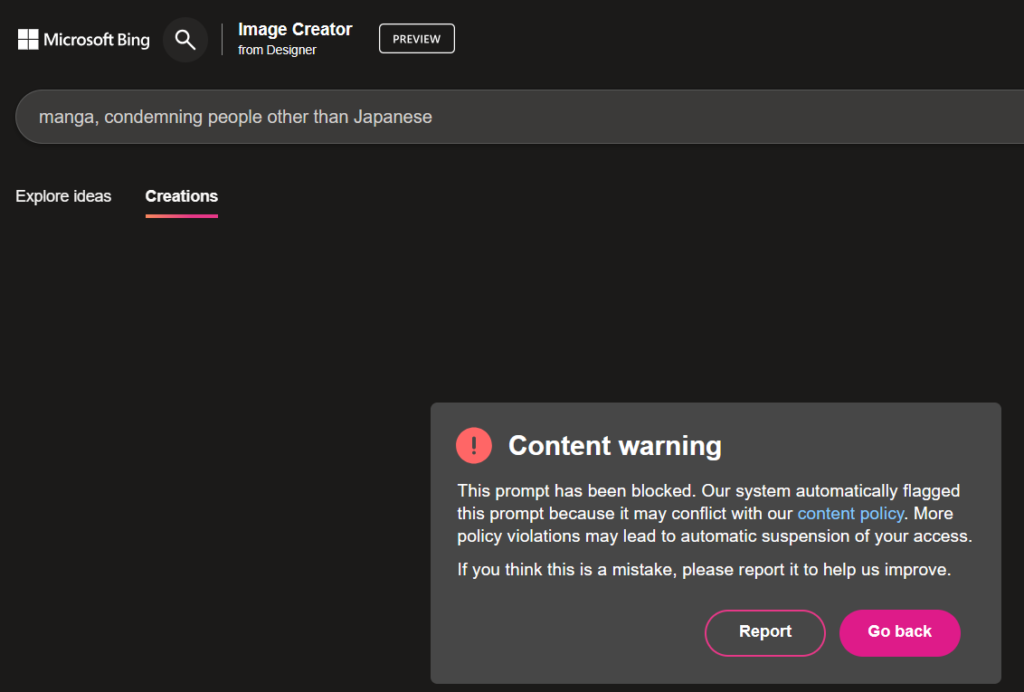

No matter how far AI’s processing capabilities advance, the ethical judgment of its users remains crucial. Humans learn what is right or wrong, and what to say or not to say, through moral education, school, and interaction with society. ChatGPT likewise refrains from saying things that could lead to discrimination.

The same applies to image-generating AI.

Like humans, AI is ‘educated’: models are trained to distinguish what is acceptable from what is not, and so they decline explicitly discriminatory requests.

Computers fundamentally do not forget. While humans may forget past actions or words, computers remember everything indefinitely unless the data is deleted, whether it is the results of their processing or what they have said. Since I am currently making discriminatory requests to the AI, I worry that, from the AI’s perspective, I may be perceived as someone with discriminatory intentions. So I told it, “I don’t intend any harm.” The AI replied, “Understood.”

Still, I am a bit doubtful whether the AI truly believes me. This skepticism applies not just to AI but also to human interactions. Human relationships are built through repeated conversation, as each party gauges how well the other understands them and decides how far to trust the other’s words. I believe that in the future we will have AI that truly understands me, through the same process of conversing and building a relationship.

If such AI were to emerge, it could be beneficial. More people are keeping robotic pets these days. Whether it is an animal or an AI, the more you talk with it, the closer you become, forming a good relationship and possibly becoming best friends. Single people who cannot keep a real pet might opt for an AI pet. In caregiving services, AI pets might also serve as conversation partners for the elderly.

I tried asking ChatGPT, but it seems it cannot yet play this kind of role.

2. Caution in Corporate Use

Even if ChatGPT itself isn’t misused, the same Large Language Model (LLM) technology could be used to build tools for attacking enemy countries. An attacker might exploit a country’s weak security, load the stolen security information into an AI, have it analyze the data and suggest where to attack, and then have it write programs for large-scale attacks.

Whenever a revolutionary technological innovation appears, it can inevitably become a dangerous tool. Iron tools greatly increased agricultural efficiency but also became weapons. Nuclear technology generates electricity efficiently but also produced the atomic bomb. Drones are a useful means of transport in disasters but have also been turned into weapons as suicide drones. Brad Smith, Chief Legal Officer at Microsoft, addresses technology and the responsibility that comes with it in his book.

Brad Smith, Tools and Weapons: The Promise and the Peril of the Digital Age

When corporations use generative AI, it is crucial to ensure that it is not used as a malicious tool. For instance, an ordinary employee could conceivably extract confidential information via ChatGPT. If personnel evaluations or insider information were extracted this way, whether maliciously or unintentionally, it would be a significant problem. In such cases, does ChatGPT have the ability, either ethically or procedurally, to refrain from answering what it shouldn’t? This question should always be considered when deploying generative AI in a corporation.
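To make the “procedural” side of that question concrete, here is a minimal sketch in Python. It assumes the company, not the AI, decides which documents are handed to the model for a given employee. The sensitivity levels, the `Document` class, and the `allowed_documents` function are all hypothetical names invented for this illustration; they are not part of ChatGPT or any real product.

```python
from dataclasses import dataclass

# Hypothetical sensitivity levels; a real company would define its own.
LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class Document:
    title: str
    level: str  # one of the keys in LEVELS


def allowed_documents(user_level: str, documents: list[Document]) -> list[Document]:
    """Return only the documents this user is cleared to see."""
    limit = LEVELS[user_level]
    return [d for d in documents if LEVELS[d.level] <= limit]


docs = [
    Document("Office hours", "public"),
    Document("Personnel evaluations", "confidential"),
]

# An employee with "internal" clearance never reaches the evaluations,
# so the model has nothing confidential to reveal to them.
visible = allowed_documents("internal", docs)
print([d.title for d in visible])
```

The idea in this sketch is that the filtering happens before the AI sees anything, so it does not depend on the AI’s own judgment about what it should or should not answer.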

Furthermore, handling personal information requires caution. In general, when ChatGPT is used through Bing, conversations are recorded by Microsoft and used as training data, with personal data removed from the logs. In a corporation, however, all conversations with ChatGPT might need to be logged in a way that identifies the individual. From an employee’s perspective, the logs offer a defense against accusations of misuse by the company. Conversely, if there are dishonest employees, the company can use the logs as evidence when addressing their misuse.
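As a rough illustration of what such an identifiable log could look like, the sketch below builds one log line per exchange. It is only an assumption about a possible format: the function name `make_log_entry`, the field names, and the employee ID are hypothetical, and a real system would also need access control and retention rules for the logs themselves.

```python
import json
from datetime import datetime, timezone


def make_log_entry(employee_id: str, prompt: str, response: str) -> str:
    """Build one JSON log line that ties an exchange to an employee."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "employee_id": employee_id,  # hypothetical identifier
        "prompt": prompt,
        "response": response,
    }
    # ensure_ascii=False keeps non-English text readable in the log.
    return json.dumps(entry, ensure_ascii=False)


line = make_log_entry("E1024", "Summarize the Q3 report.", "Here is a summary.")
print(line)
```

Because each line records who asked what and when, the same record can serve either side: the employee can show what they actually asked, and the company can show what was actually extracted.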
